ADAM

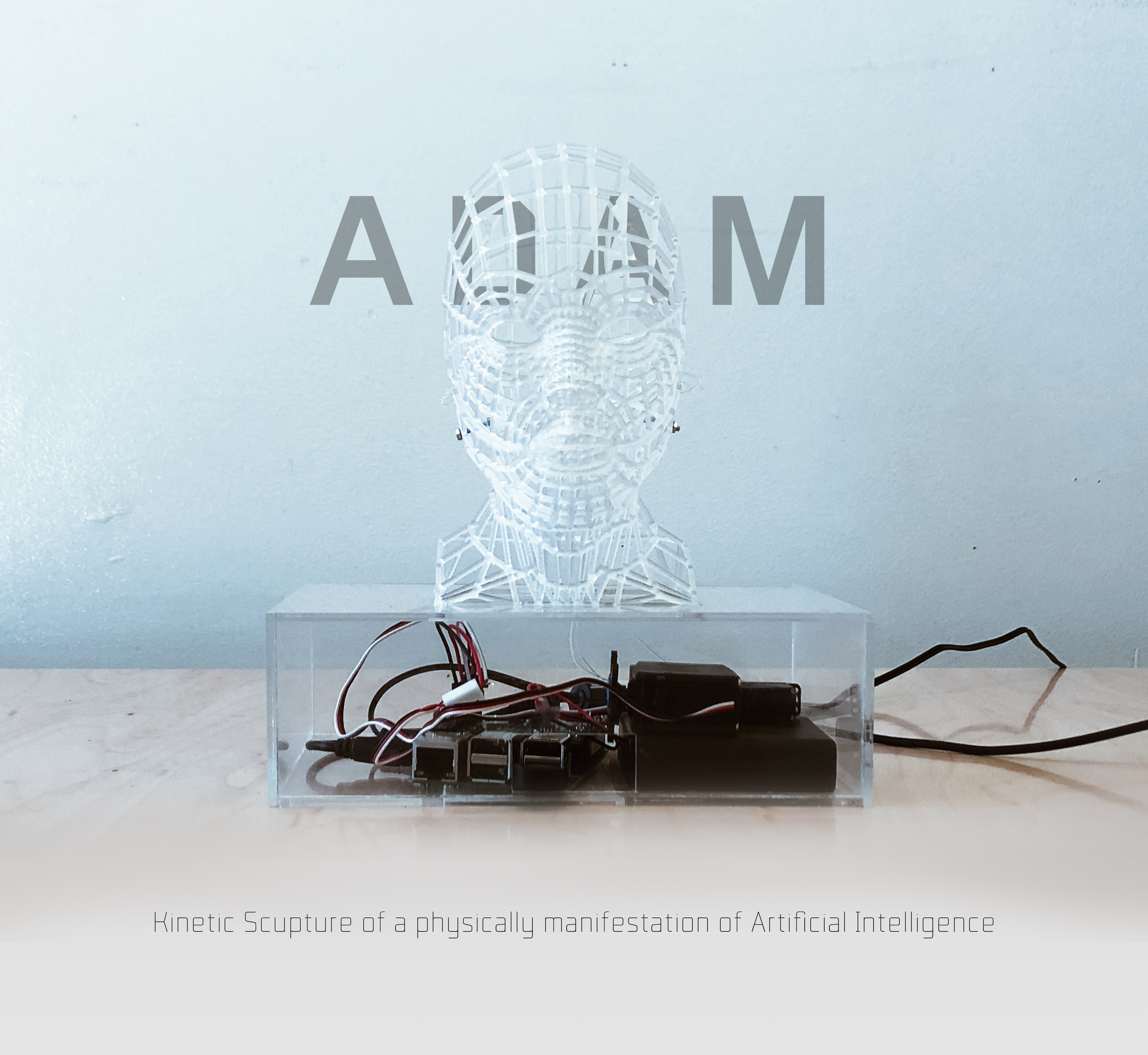

ADAM (pronunciation: /aˈdam/, [aˈðãm]) is a physical manifestation of artificial intelligence that challenges what, or rather who, we are talking to. Who is he if he exists beyond the virtual?

ADAM is a fully 3D-printed kinetic sculpture with mechanisms that allow him to move. Alexa will be installed on a Raspberry Pi to handle the audio and networking, and that data will be referenced to drive movement on the sculpture.

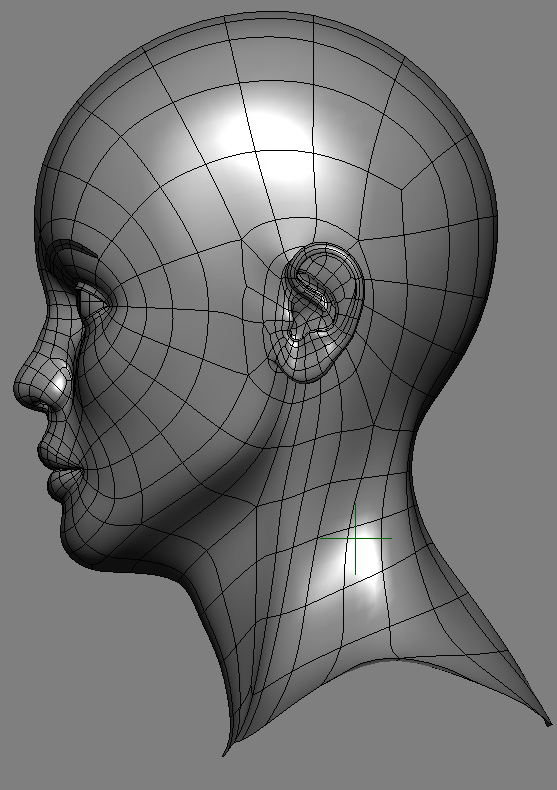

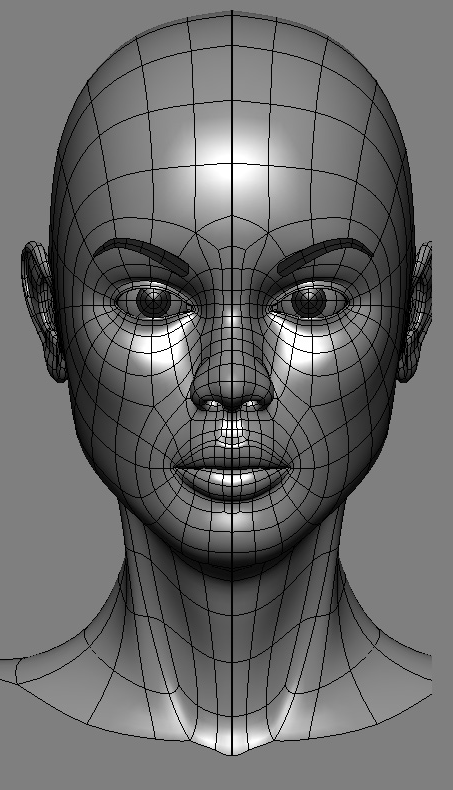

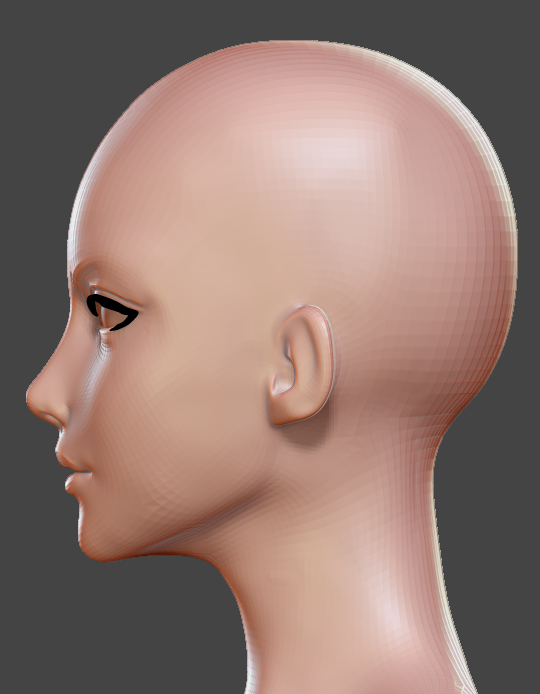

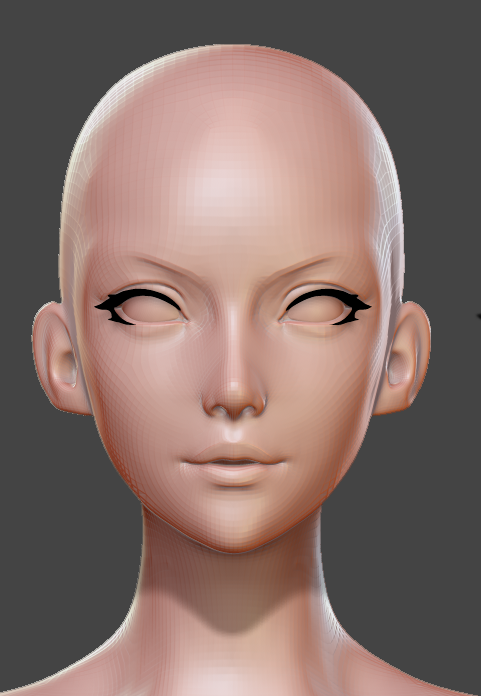

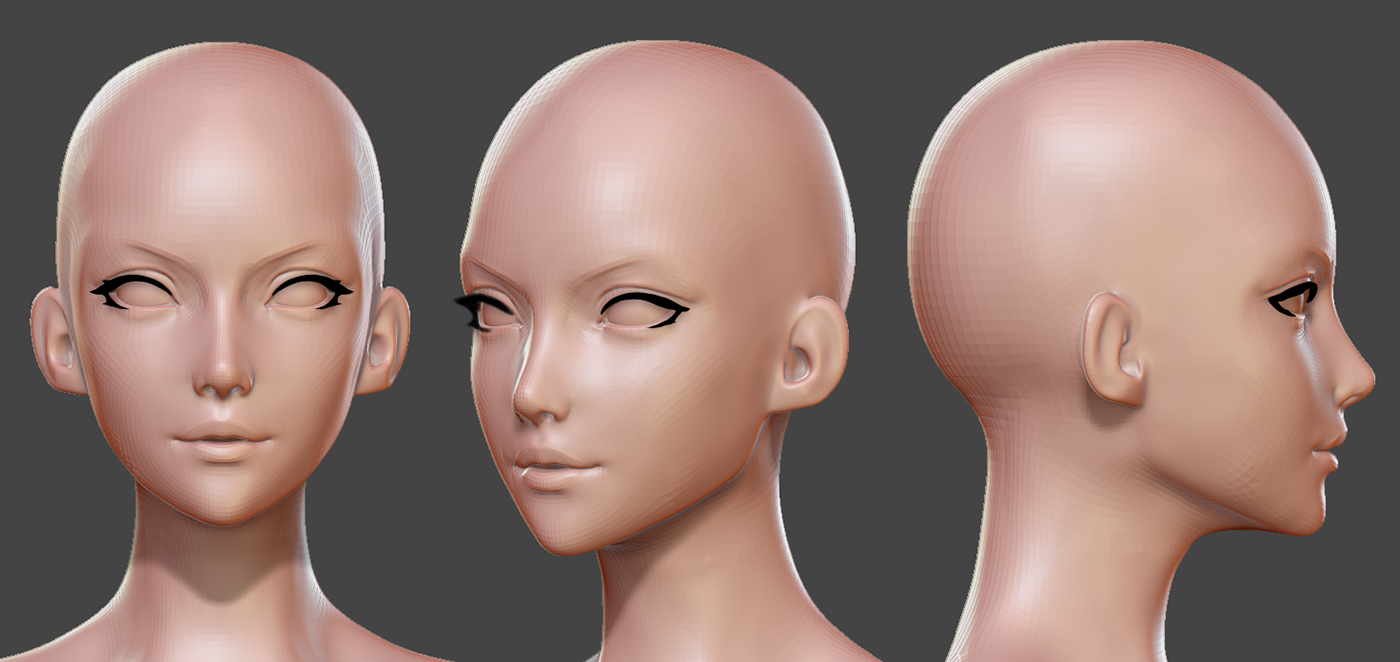

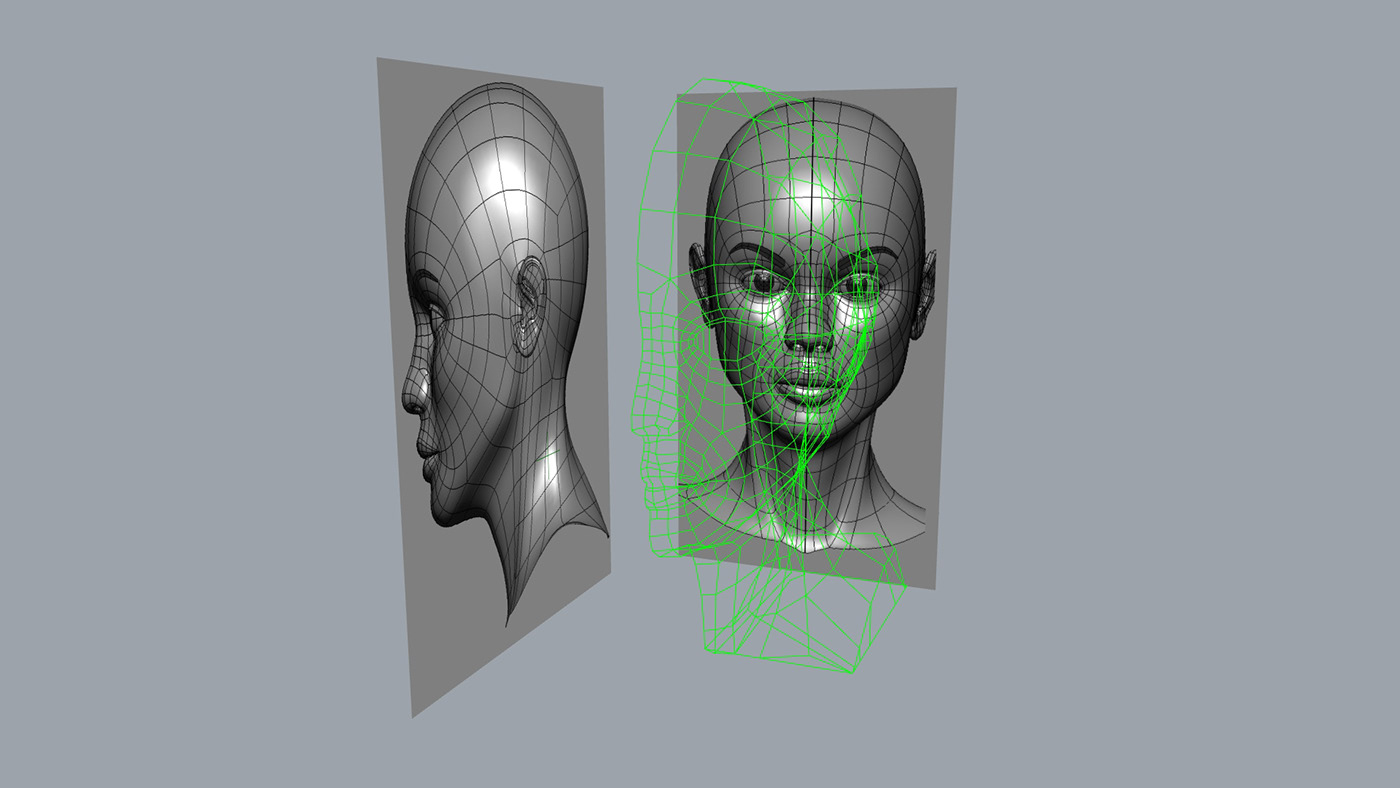

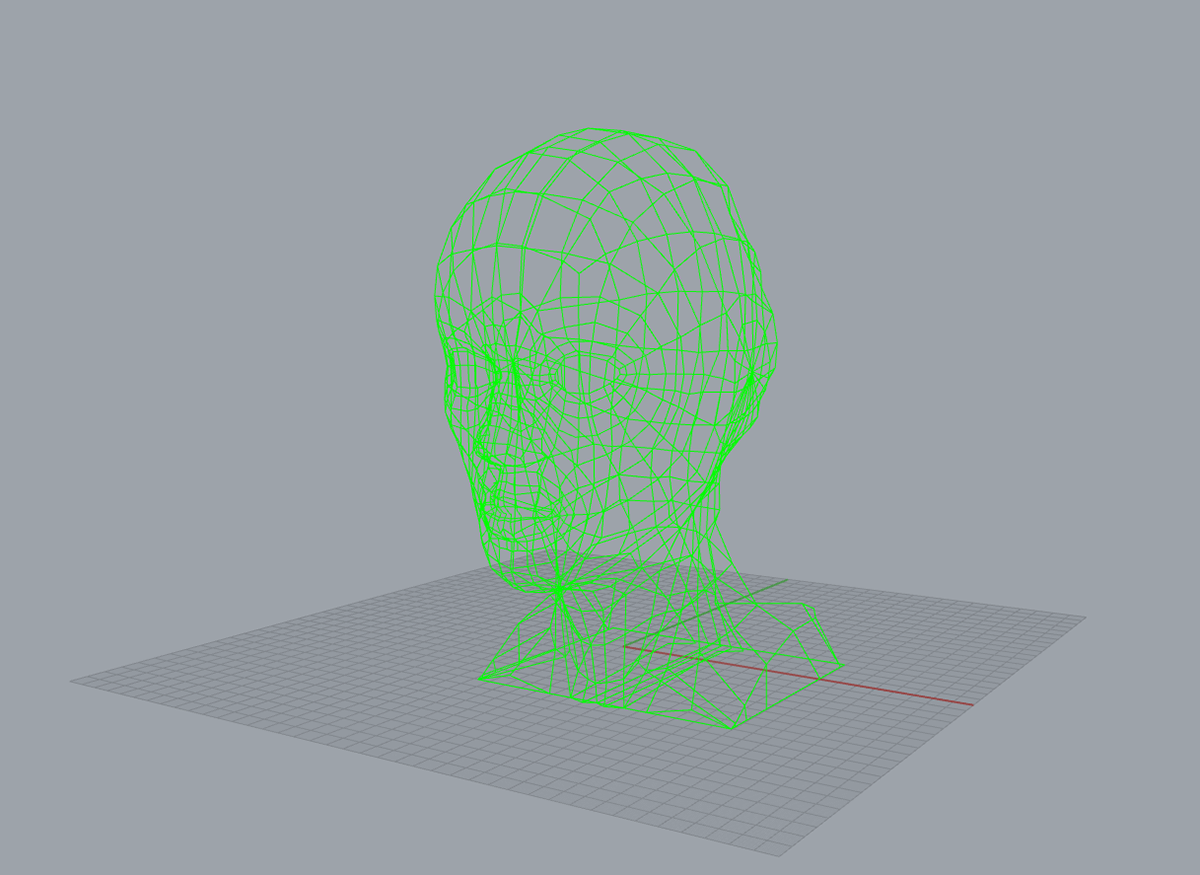

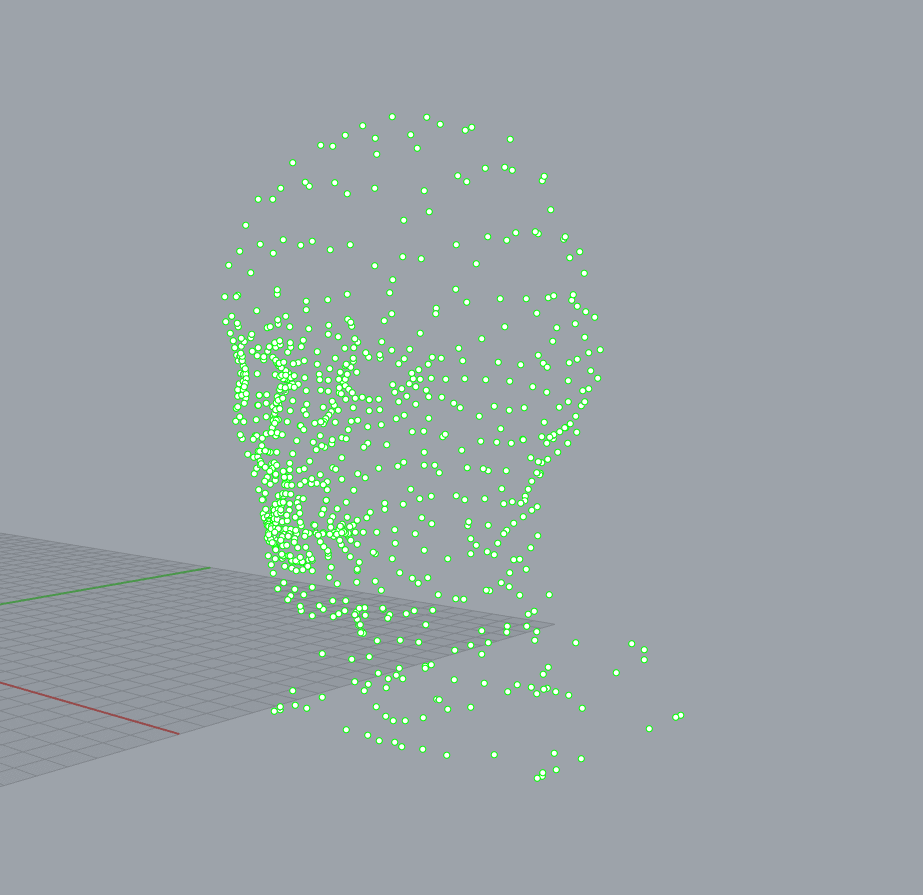

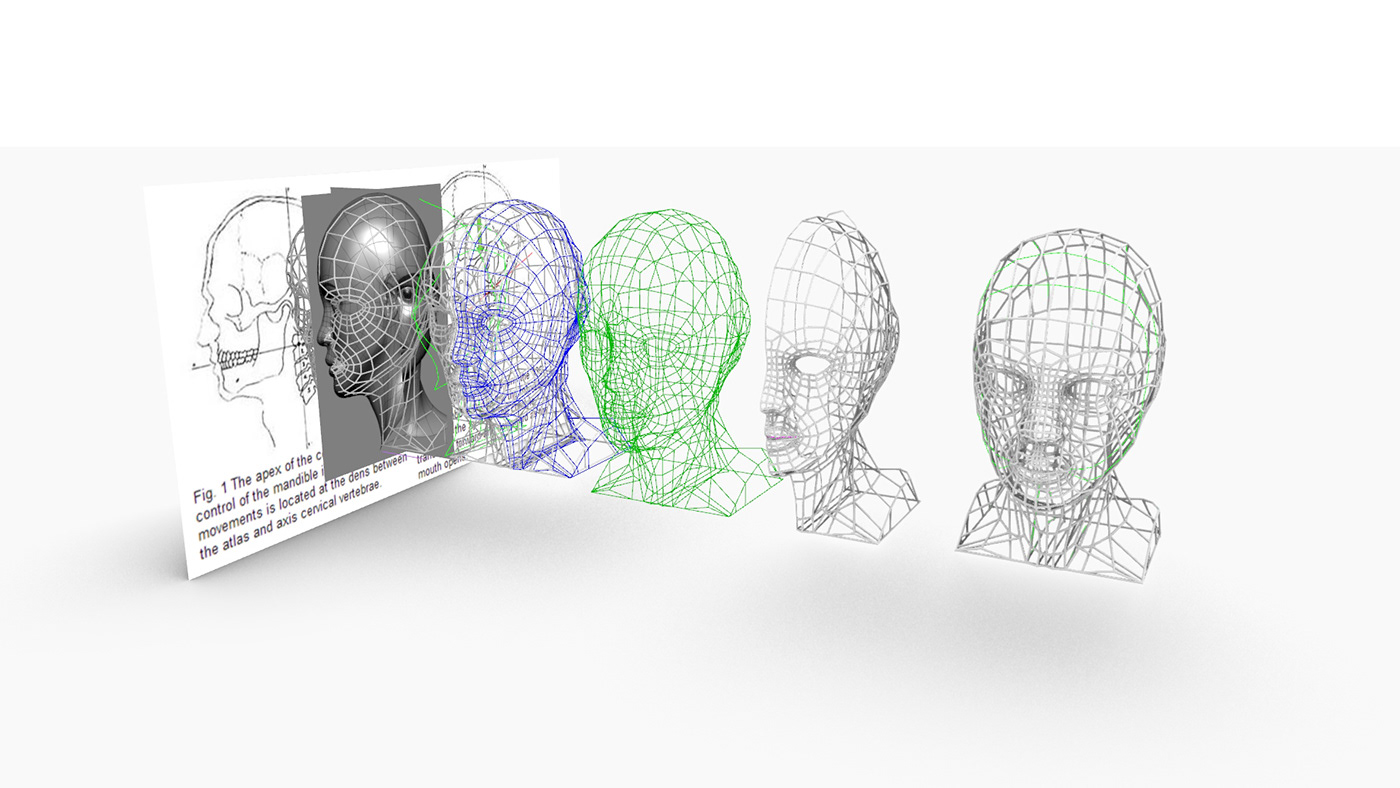

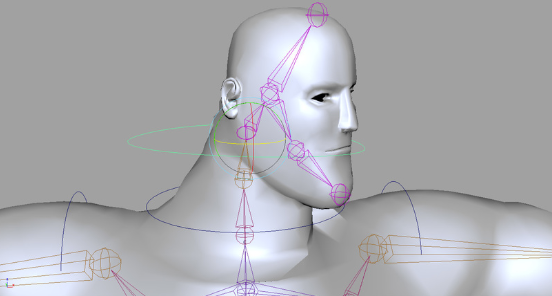

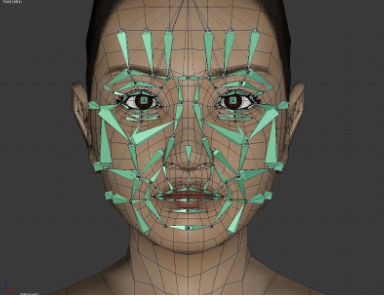

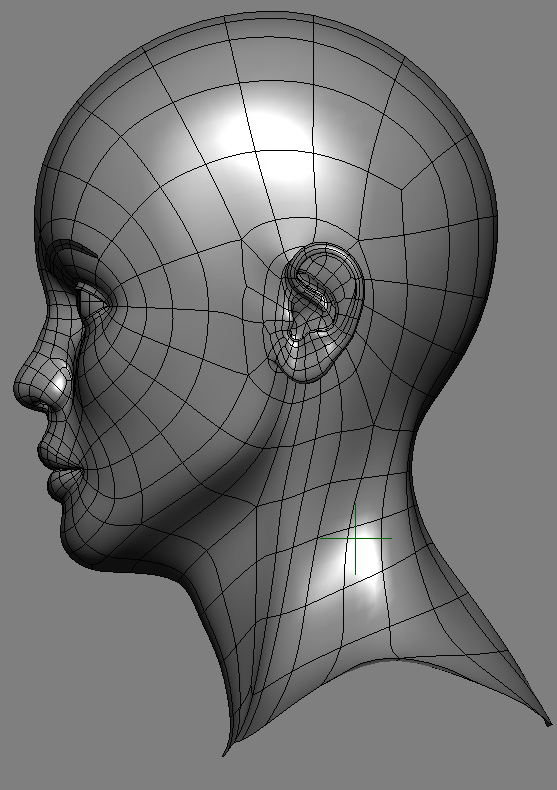

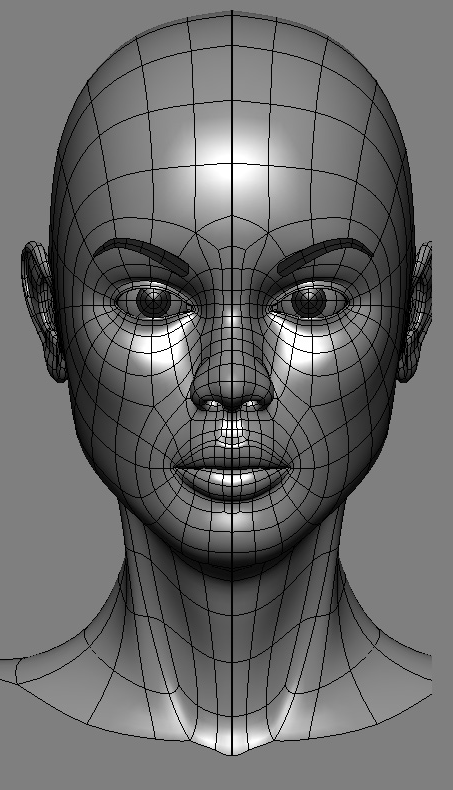

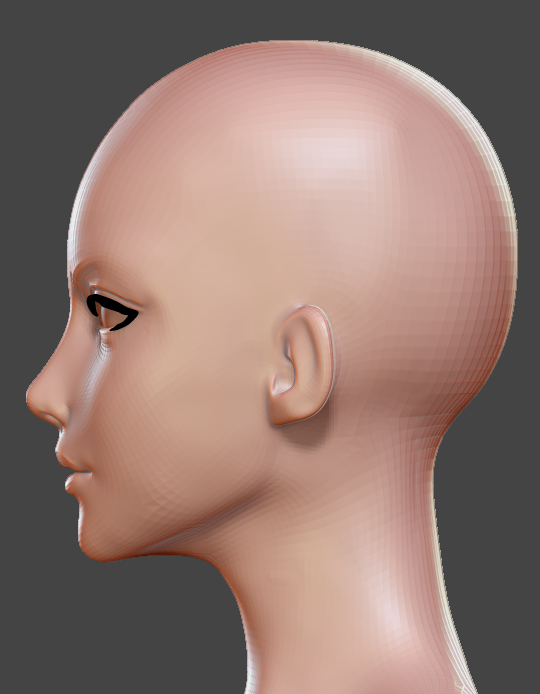

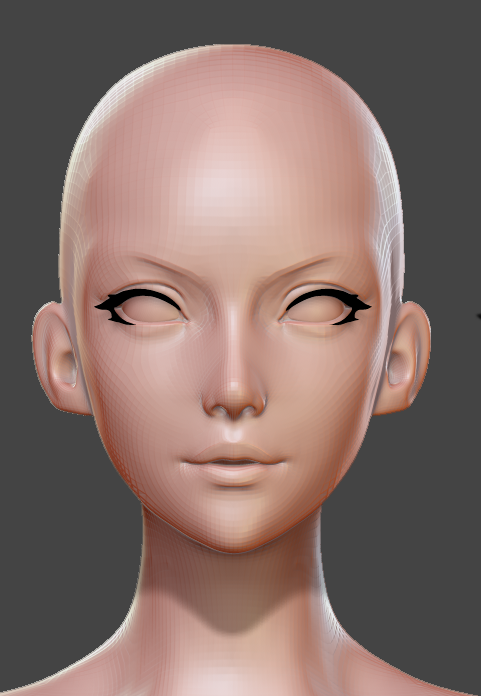

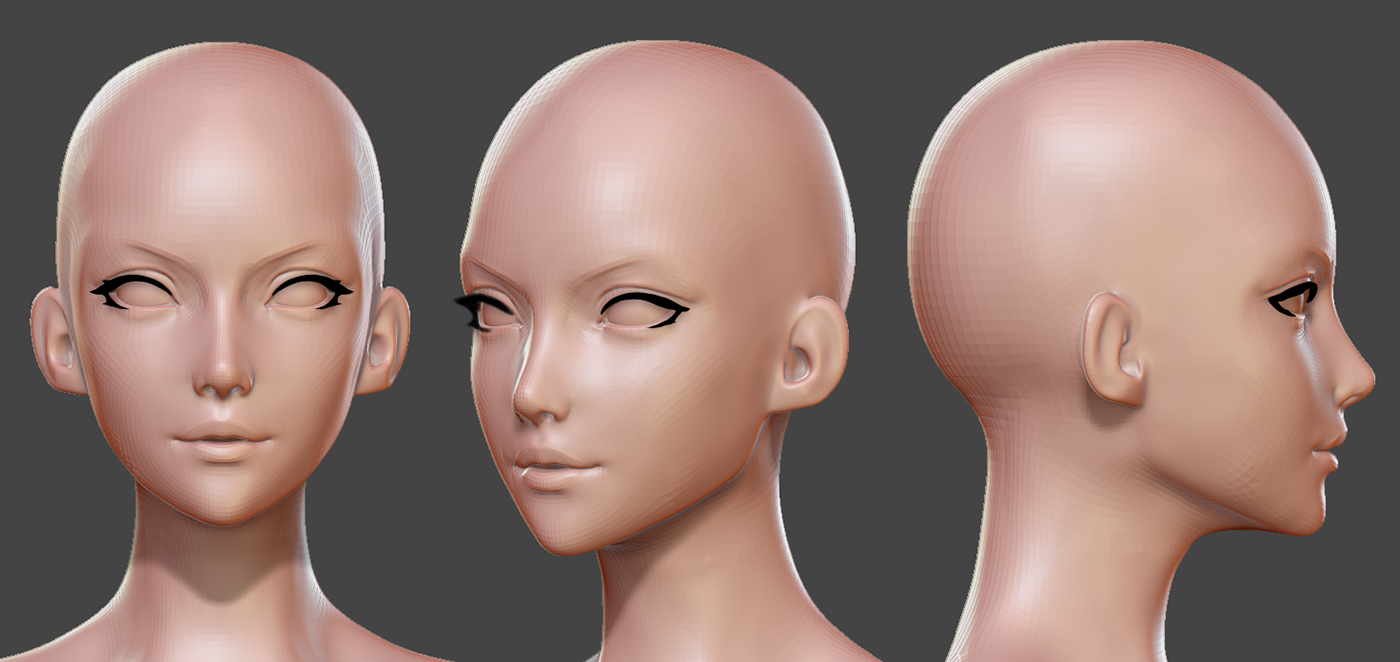

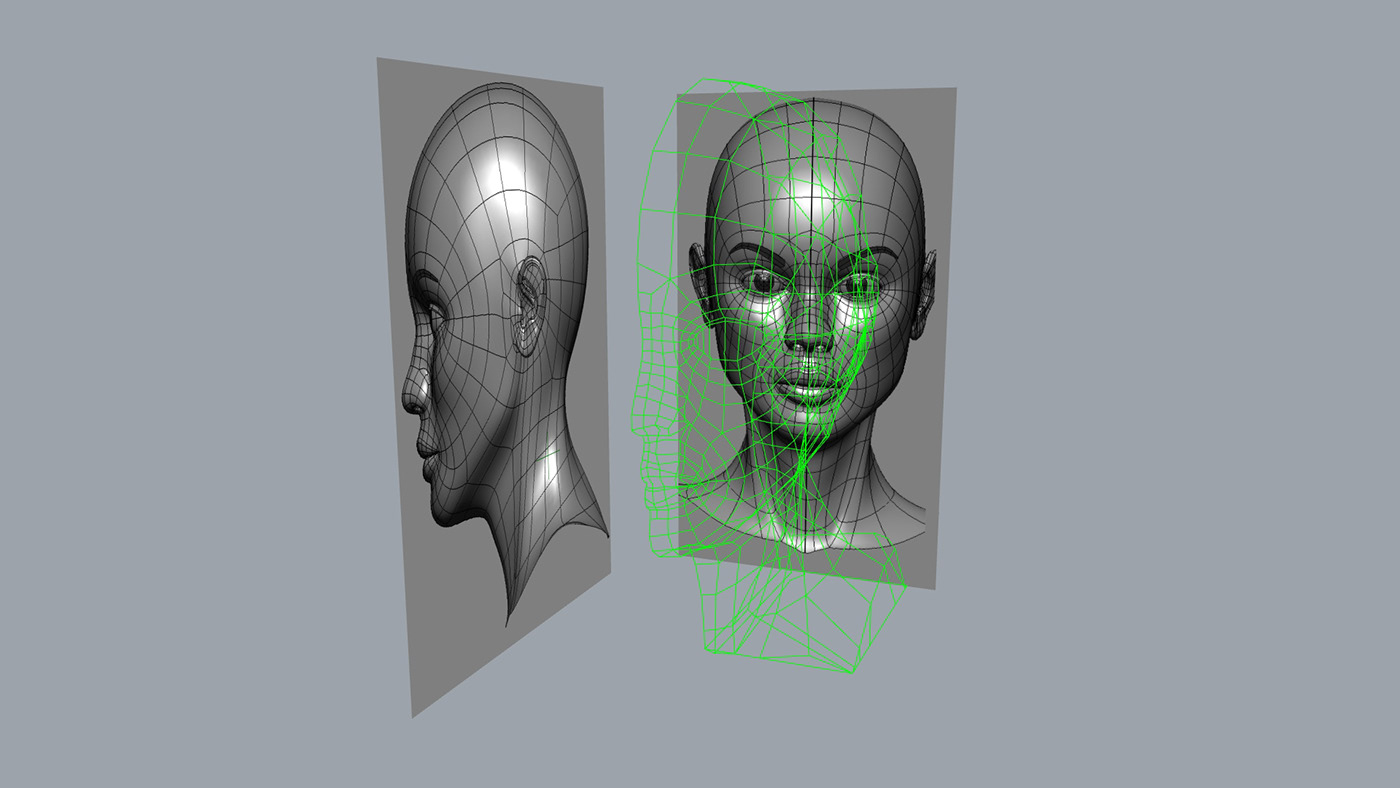

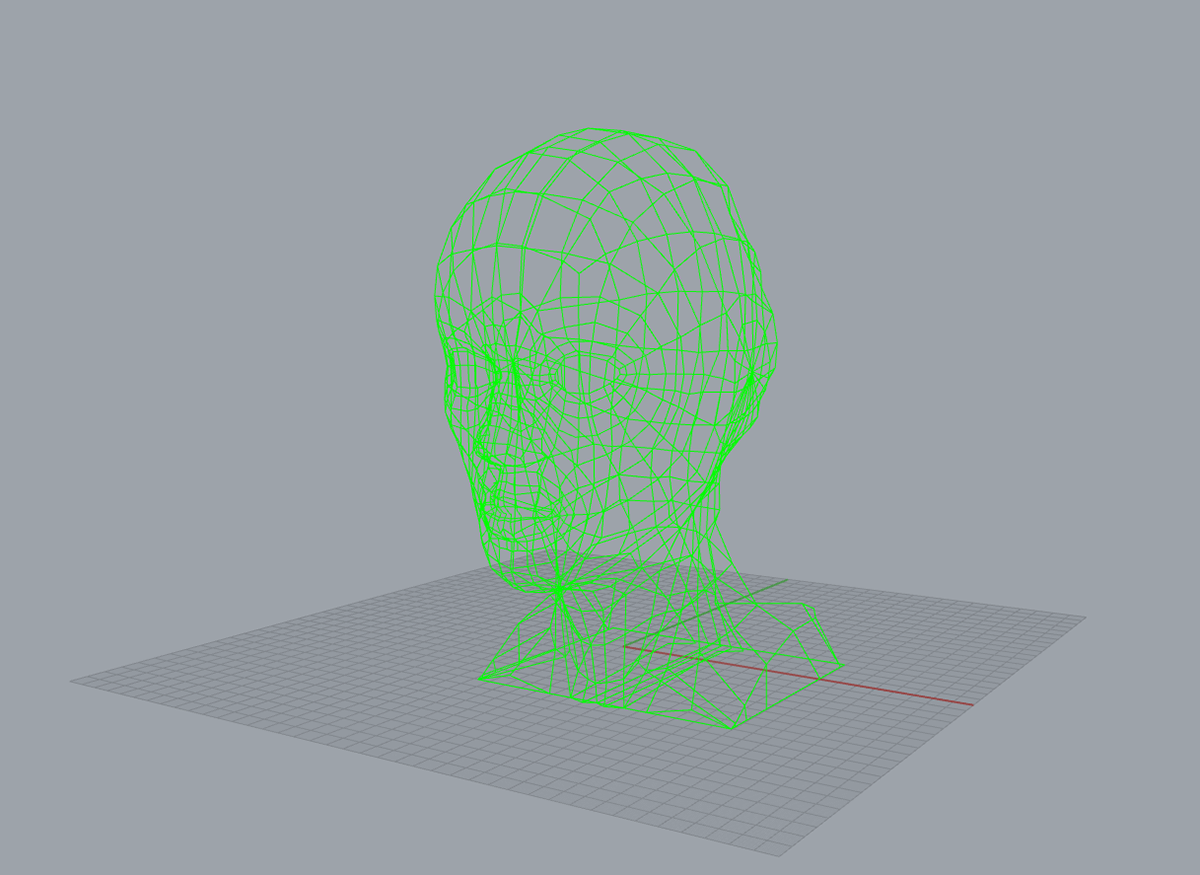

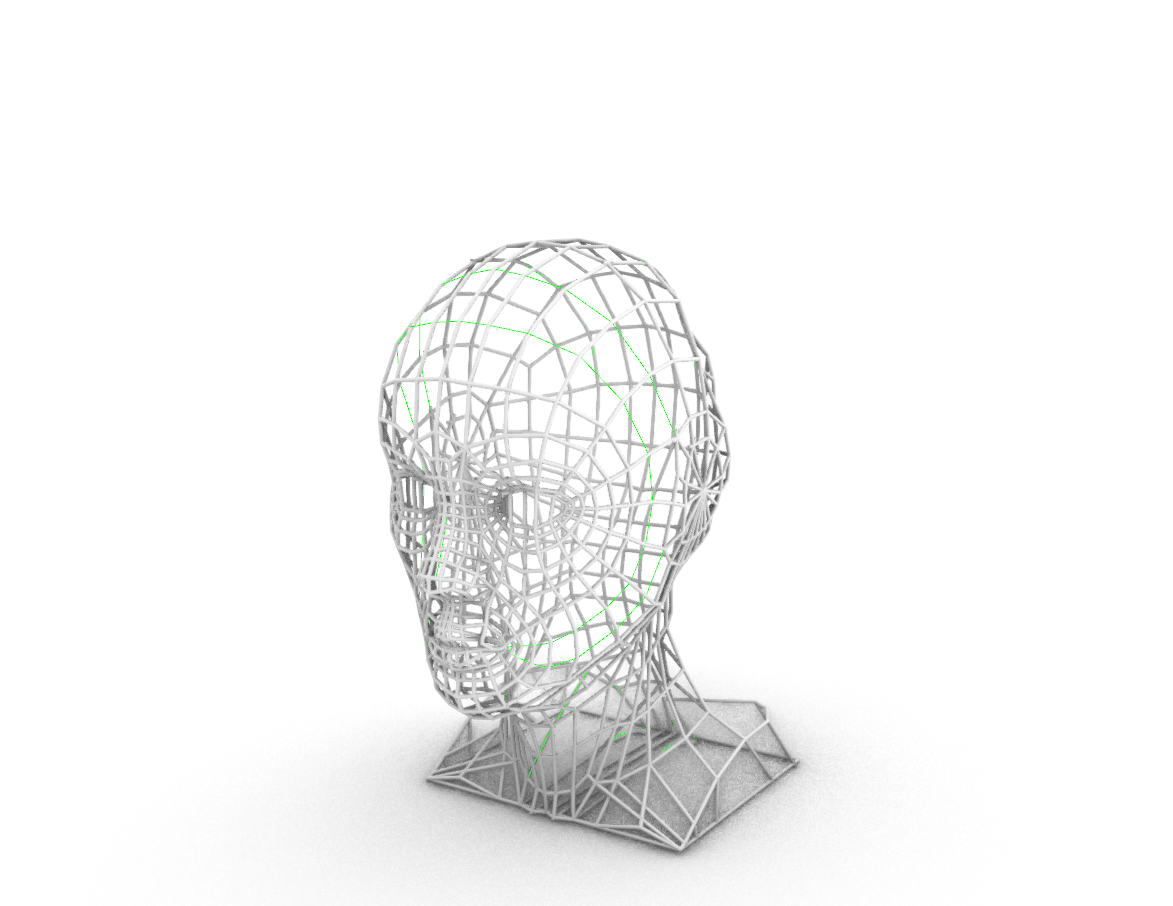

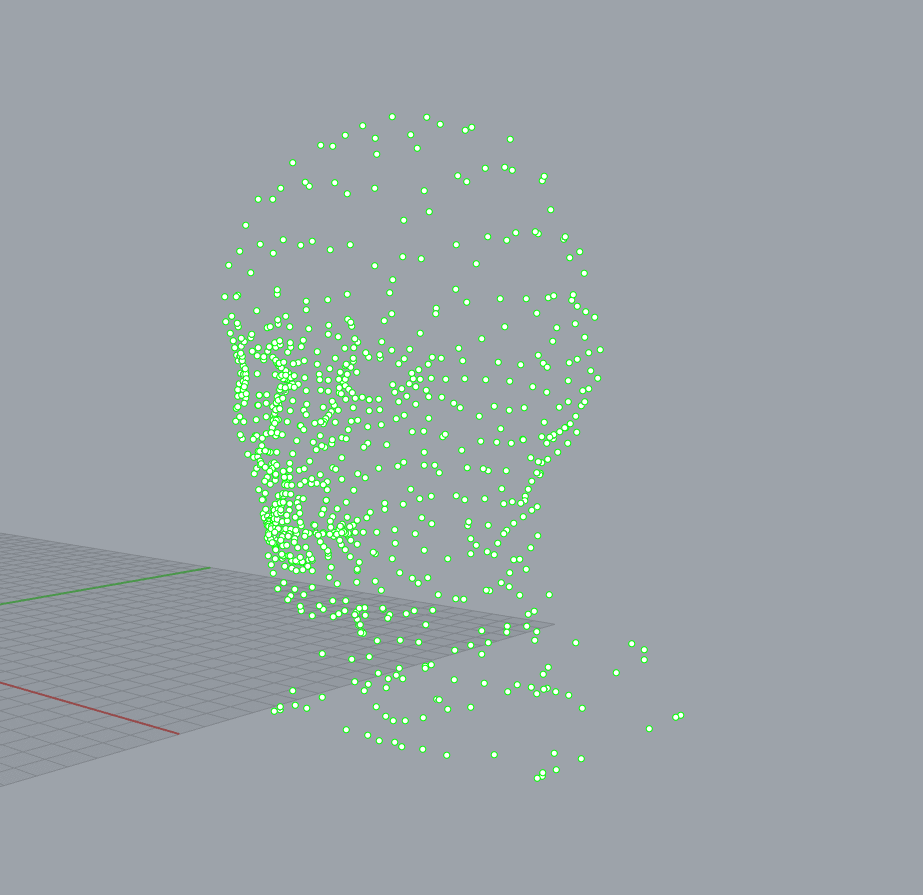

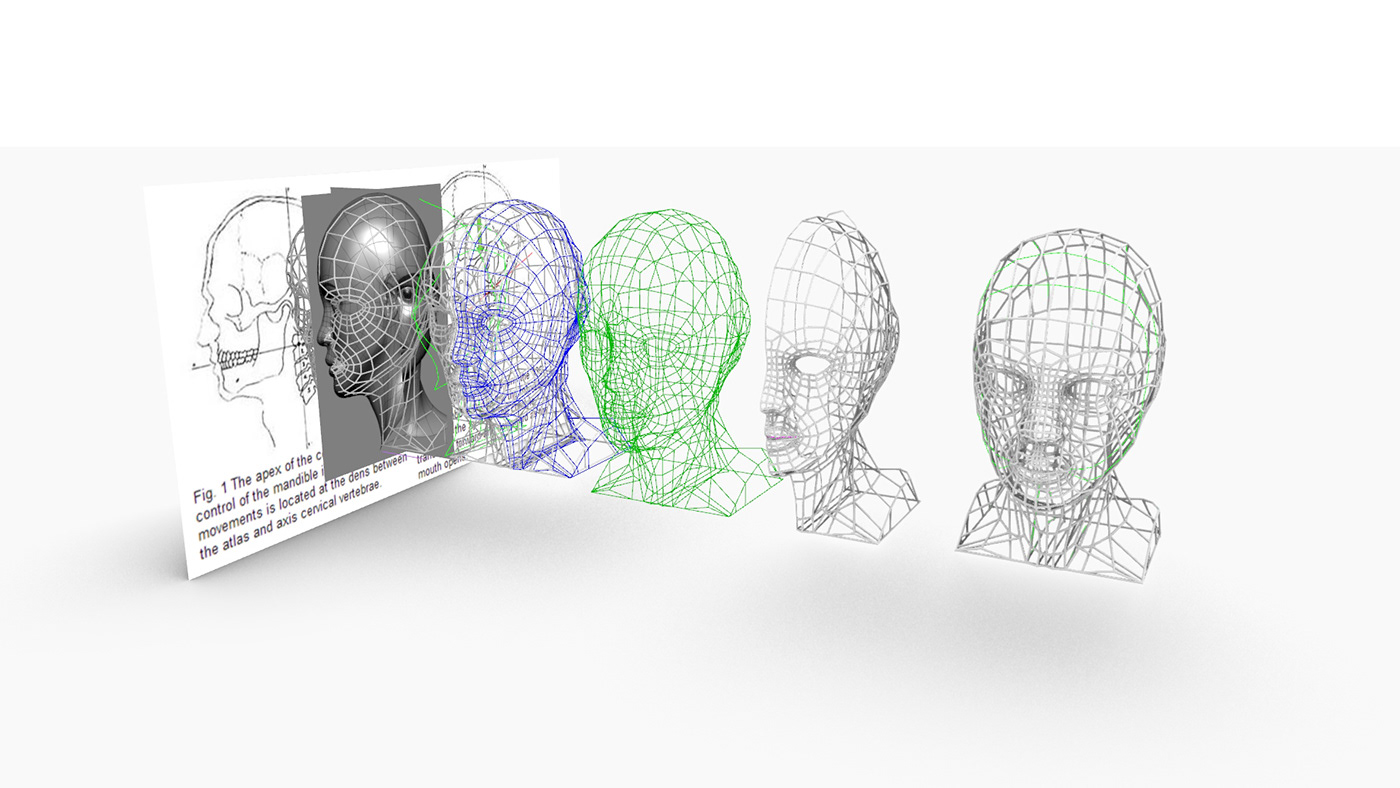

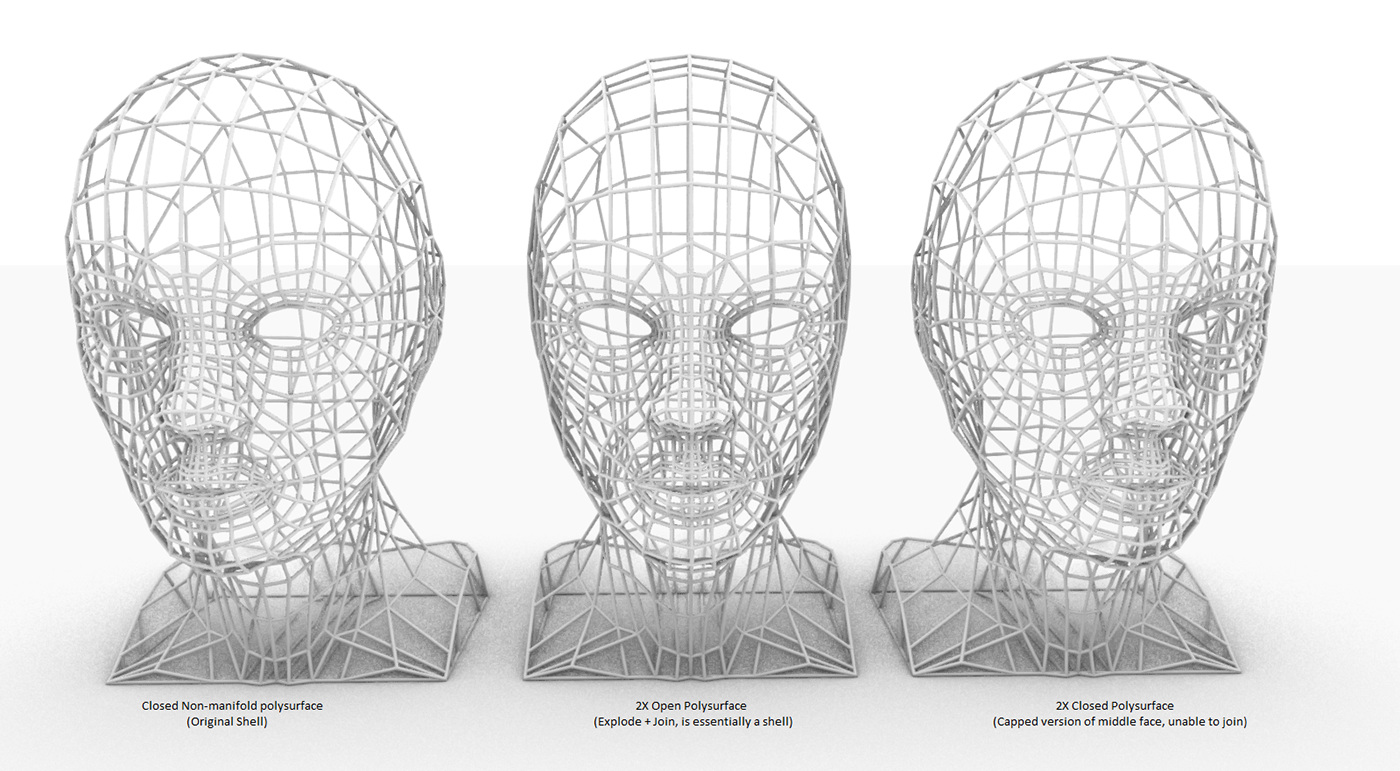

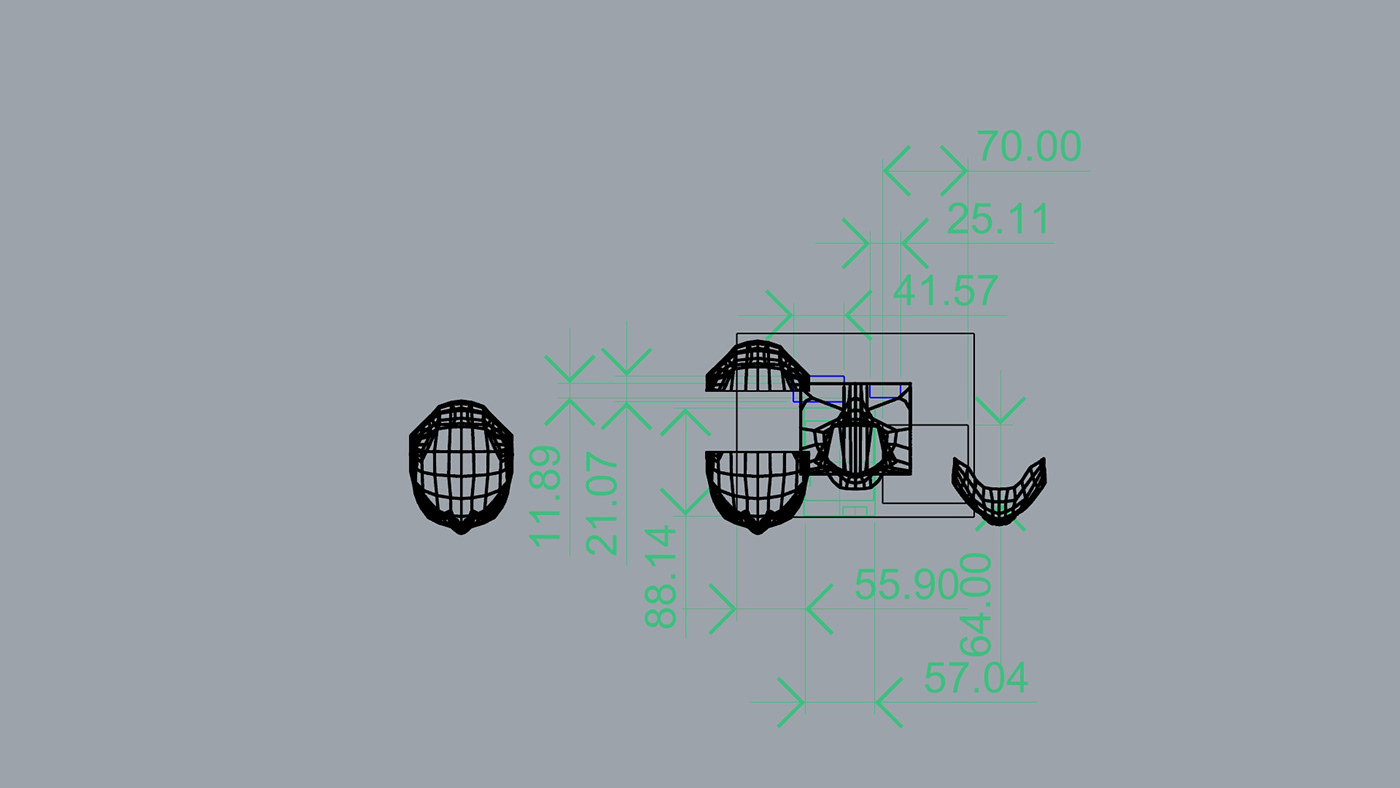

The 3D model was created by referencing human faces; curves were then used to create the low-poly aesthetic that is prevalent in sci-fi movies. Individual kinetic parts will be exported and printed.

This documentation serves as a guide to my ideation process and how I tackled this project.

Specifications:

Minimum scale (in cm):

- Head lateral width: 14.8

- Head frontal width: 12.0

- Head height: 16.7

- Head circumference: 42.8

- Eye-to-chin height: 8.1

- Ear width: 2.2

- Ear height: 4.5
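The dimensions above can be checked against a printer's build volume to see why the head is split into individually printed parts. This is only a rough sketch: it treats lateral width, frontal width and head height as a simple bounding box (my assumption), and it uses 145 × 145 × 175 mm as the build volume, which is the commonly published figure for the Form 2 and should be verified against the printer's own datasheet. The function name is hypothetical.

```python
from itertools import permutations

# Minimum head dimensions from the specification, converted from cm to mm.
# Treating these three values as a bounding box is an assumption.
HEAD_MM = (148.0, 120.0, 167.0)  # lateral width, frontal width, height

# Assumed build volume in mm (published Form 2 figure; verify before use).
BUILD_VOLUME_MM = (145.0, 145.0, 175.0)


def fits_in_one_piece(part_mm, volume_mm):
    """Return True if the part's bounding box fits the build volume in at
    least one axis-aligned orientation (diagonal placement is ignored)."""
    return any(
        all(p <= v for p, v in zip(orientation, volume_mm))
        for orientation in permutations(part_mm)
    )


print(fits_in_one_piece(HEAD_MM, BUILD_VOLUME_MM))
```

Under these assumptions the 148 mm lateral width is slightly larger than the 145 mm side of the build platform, so the full head would not fit as a single axis-aligned print.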

Equipment List:

- Formlabs Form 2 SLA Printer

- Clear SLA Resin

- 0.6mm Nylon String

- 2x Servos

- Raspberry Pi 3B+

- Sandisk 16GB MicroSD Card

- Kinobo Mini Microphone

- Clear Acrylic

Main Portions of Research x Fabrication x Mechanism x Network Integration

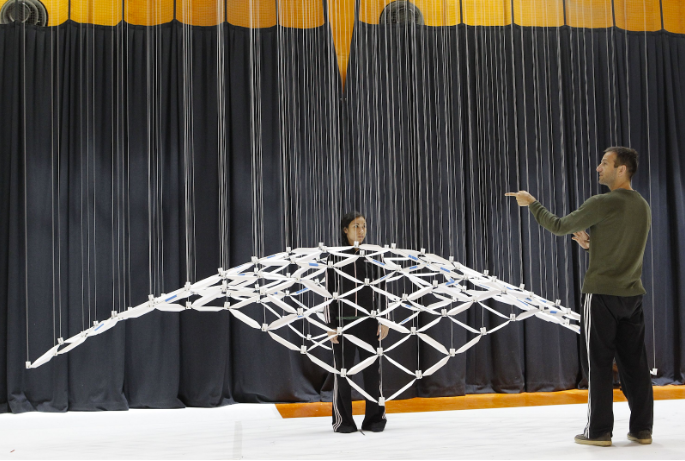

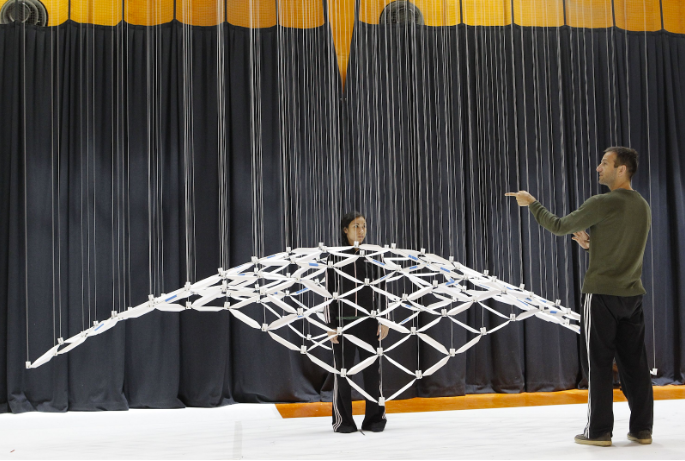

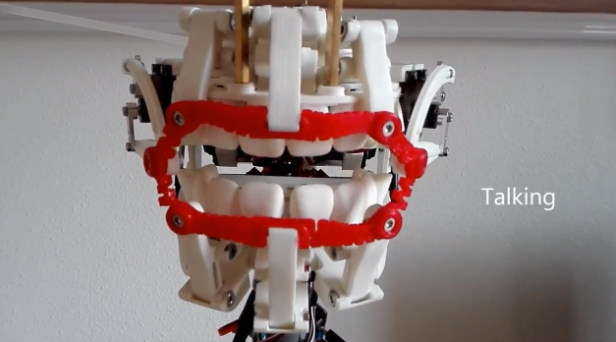

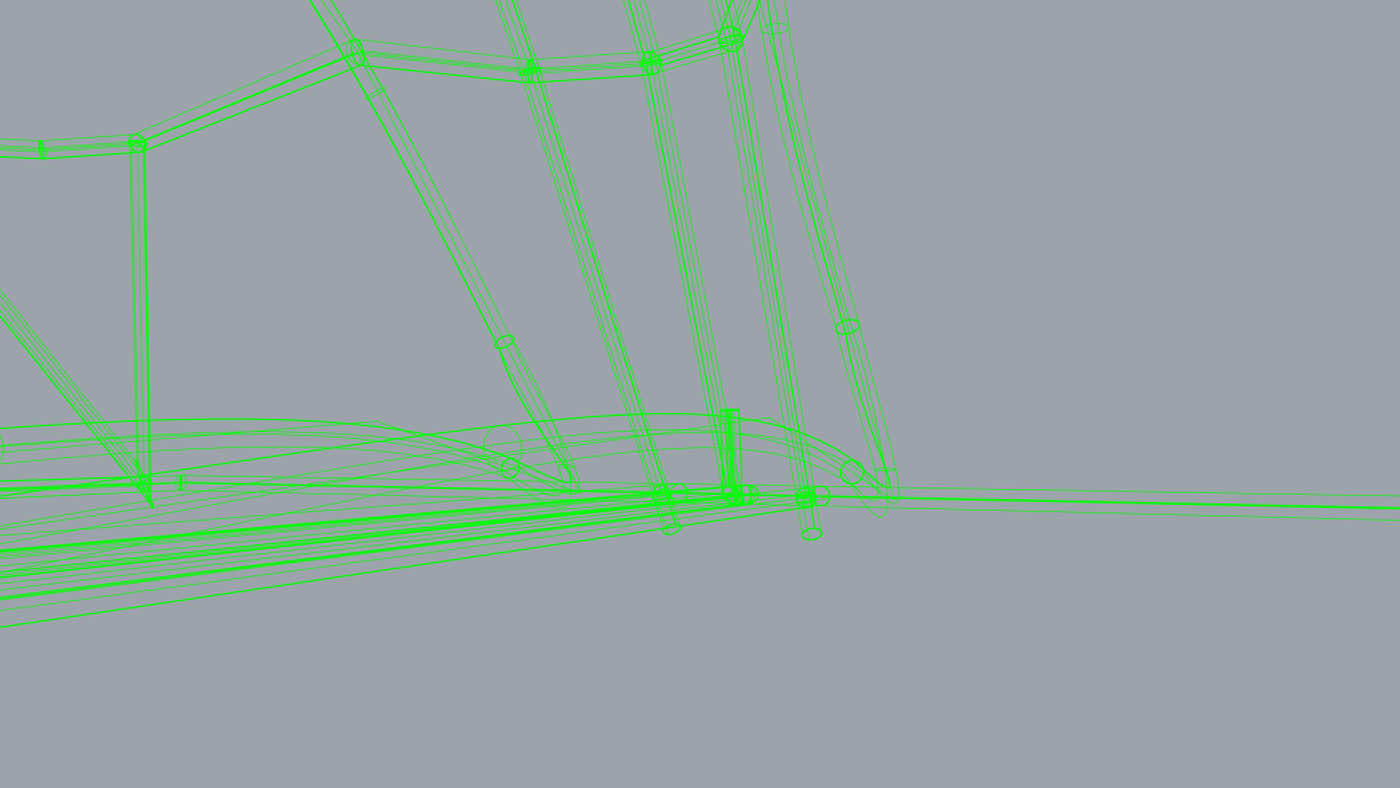

The initial idea was to create a system similar to kinetic sculptures, using strings that adjust along the Y axis to create movement. Because movement would be limited to that single axis, however, the result might seem dead or too mechanically stiff.

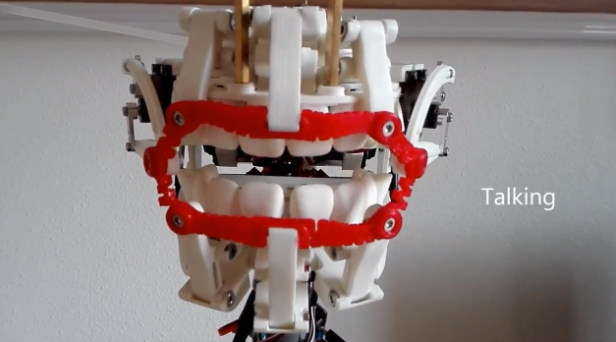

After consultation, a living hinge system at the points of movement seemed likely to help. The idea is adopted from animatronics and would require 3D printing those portions in an elastic or flexible filament (NinjaFlex). The portions would be the mouth (specifically the lips), the eyes (specifically the eyelids) and the point of rotation for the head.

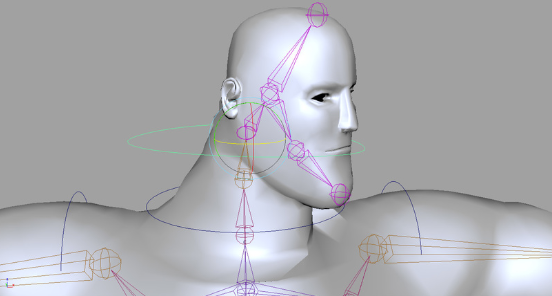

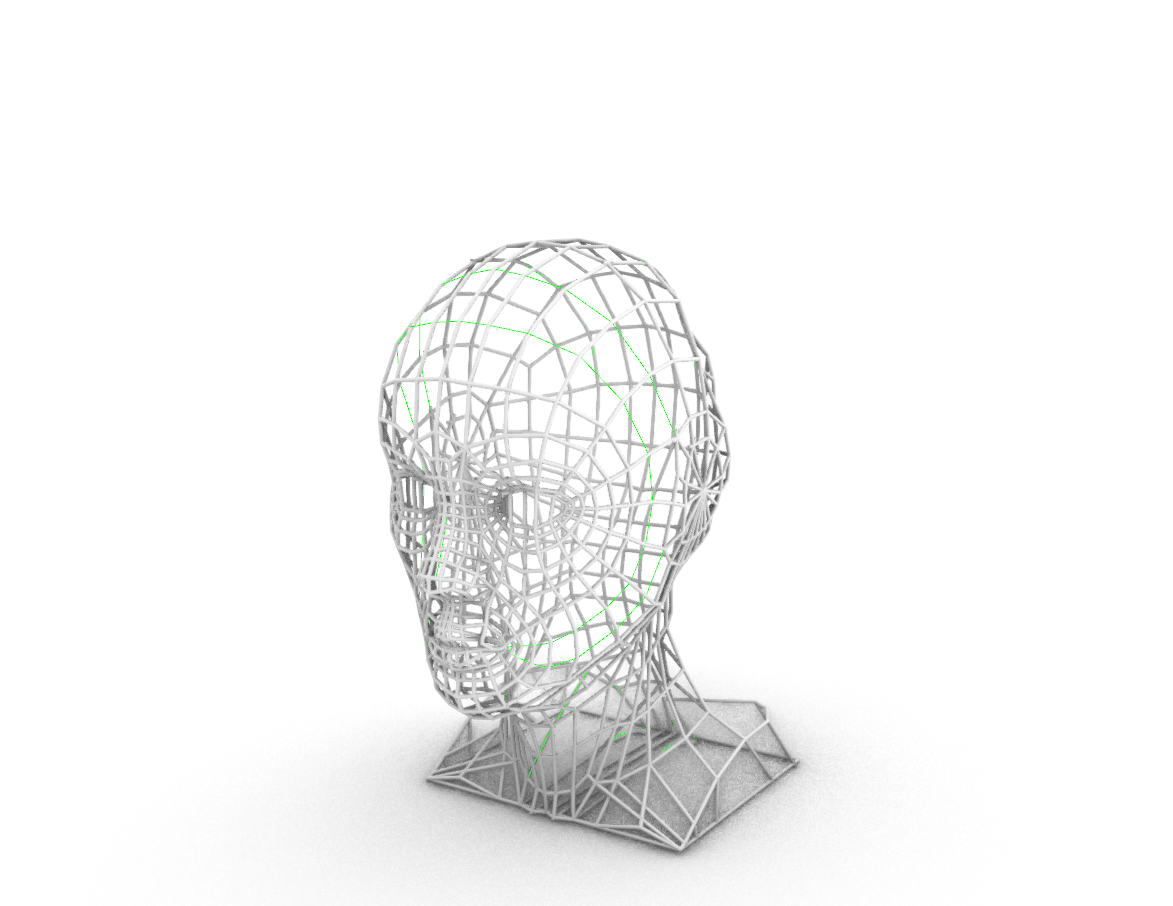

The remaining portions would be 3D printed in rigid material. The frame, or skeletal poly structure, would be printed in PLA or in clear resin. The structures will be hollow and held together by nylon threaded through them to pull the flexible portions.

(Not an accurate representation of the final outcome; used only for visualization.)

The structure will be generally stable and immovable from the chin down, providing a base with the structural integrity to support the entire sculpture. A solid structure should also extend to the back of the head, as there are no pivot points there.

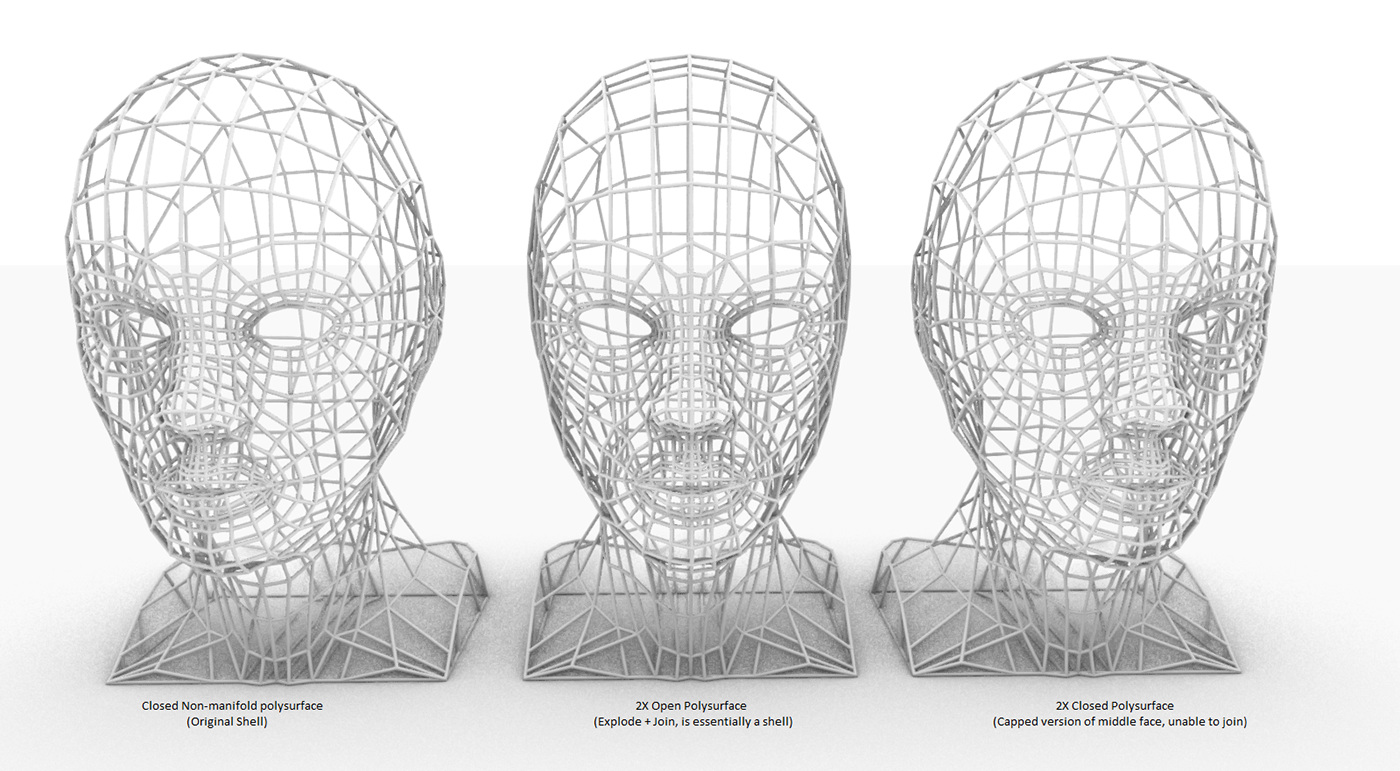

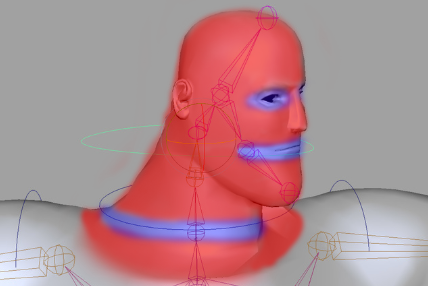

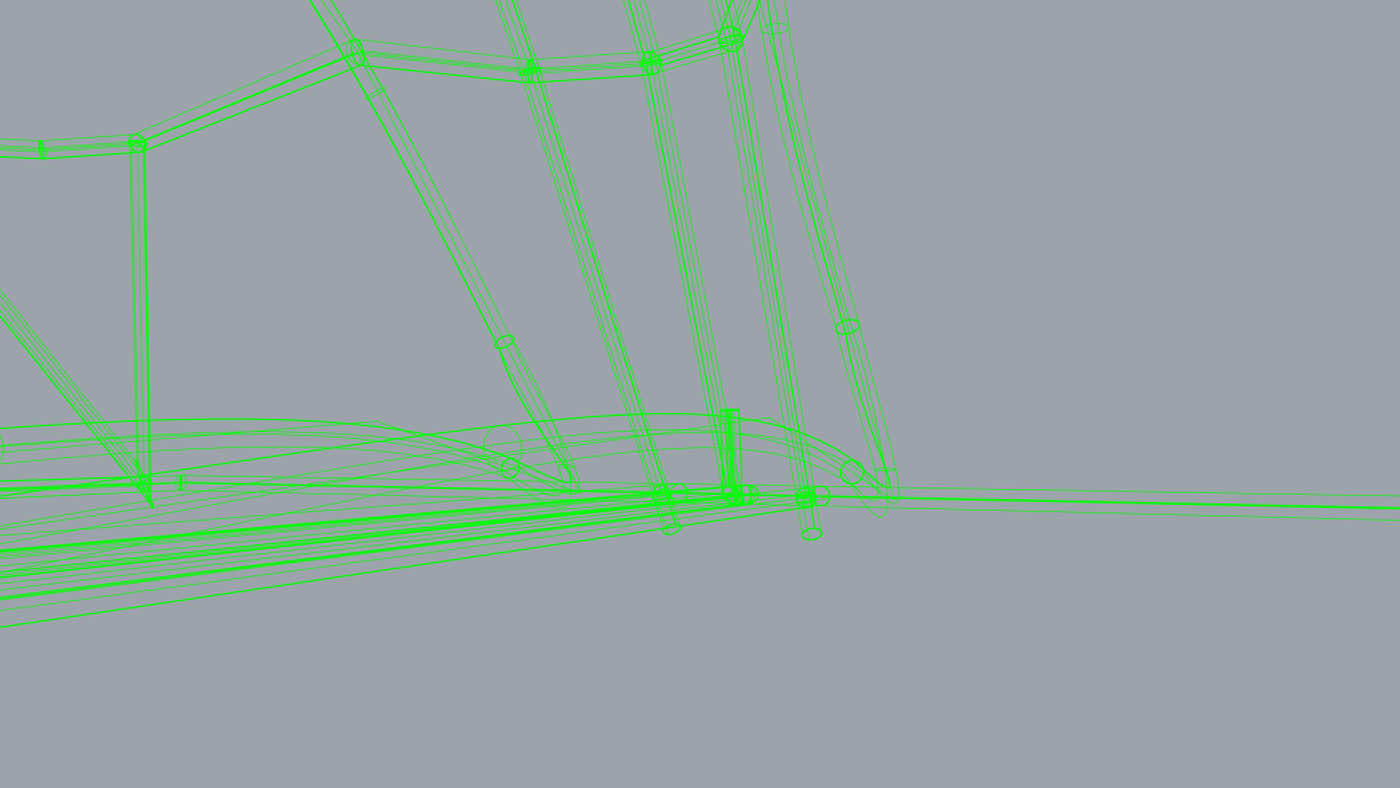

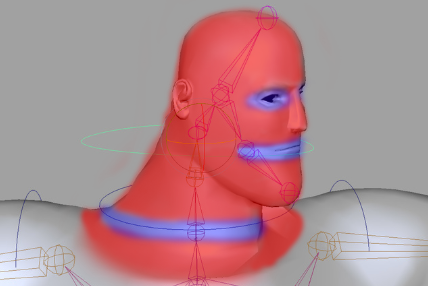

The red parts indicate a solid PLA/SLA structure, while the blue parts indicate a flexible NinjaFlex joint that enables turns.

Nylon wires will be fed through all the tubes, with joints attached to the pivot points on the NinjaFlex to create movement.

The fabrication method could be negative casting, to create a hollow shell for the nylon string to pass through.

ADAM will have 4 points of motion: head turn, head tilt, eye blink/squint, and mouth open/close.

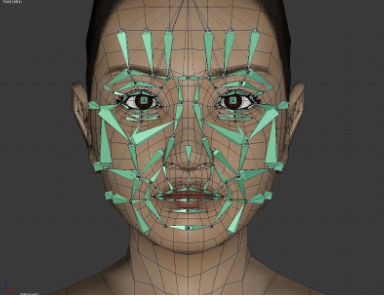

Facial mechanisms will largely depend on nylon strings rigged to their respective motors. The eyes and mouth will work on a simple pull-and-release mechanism that is commonly used in costume mechanisms.

Because of the nature of the SLA print, it can be stretched; when pulled by a contracting nylon string, it will close automatically. The nylon will be threaded through the 3D-printed tubes to reach their respective destinations.
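The pull-and-release idea can be turned into a rough number for how far a motor has to turn. The sketch below assumes the nylon winds onto a small round spool fixed to the motor shaft, so the string taken up equals the arc length (radius × angle in radians). The spool, its radius and the function names are my assumptions for illustration, not measured values from the build.

```python
import math


def spool_angle_for_pull(pull_mm, spool_radius_mm):
    """Angle in degrees a spool must turn to take up pull_mm of string.

    Uses arc length = radius * angle (radians); assumes the string winds
    cleanly on the spool without slipping or stretching.
    """
    if spool_radius_mm <= 0:
        raise ValueError("spool_radius_mm must be positive")
    return math.degrees(pull_mm / spool_radius_mm)


def pull_for_spool_angle(angle_deg, spool_radius_mm):
    """Inverse of spool_angle_for_pull: string taken up for a given turn."""
    return math.radians(angle_deg) * spool_radius_mm


# Hypothetical example: an eyelid needing 10 mm of travel on a 12 mm spool.
print(round(spool_angle_for_pull(10.0, 12.0), 1))
```

A larger spool needs less rotation for the same pull but asks more torque of the motor, so the radius is a trade-off worth testing on the actual print.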

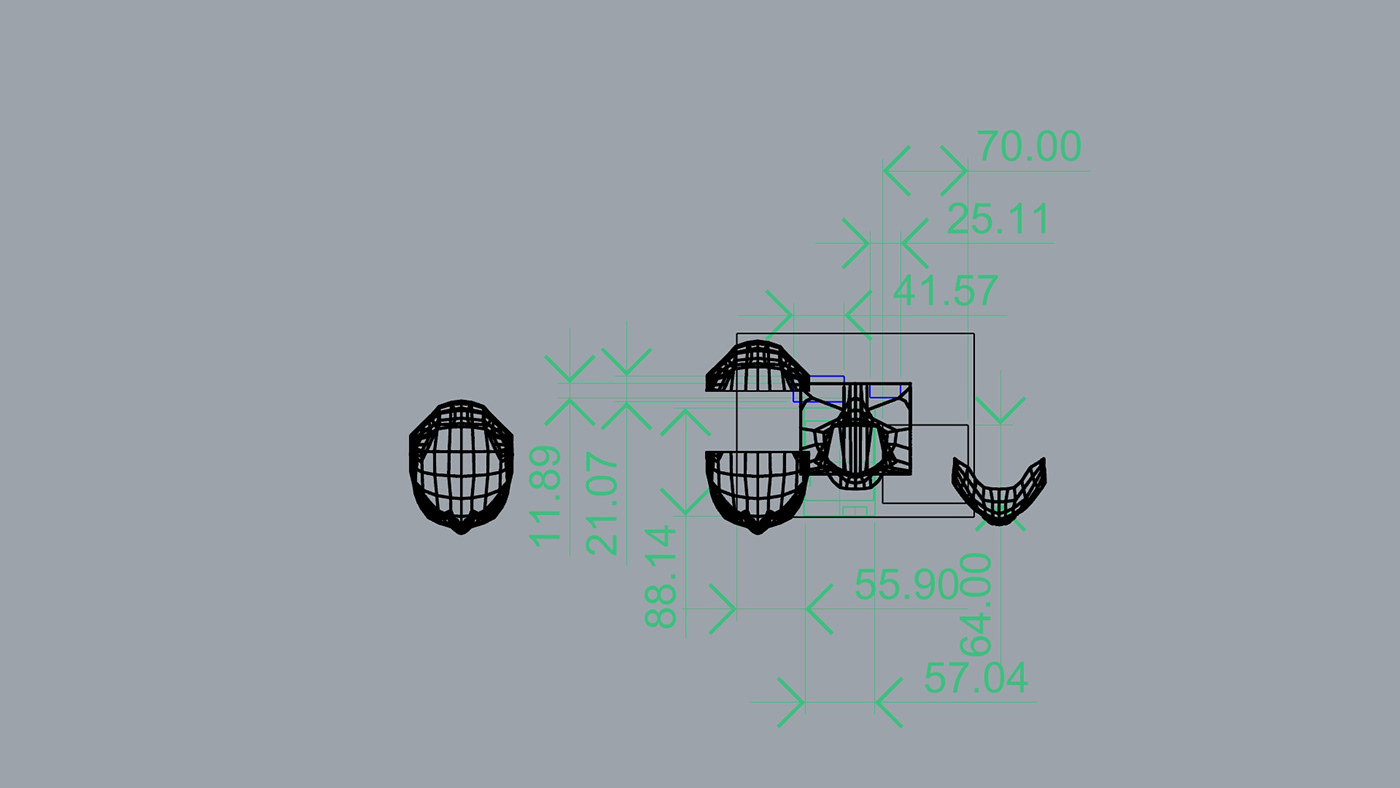

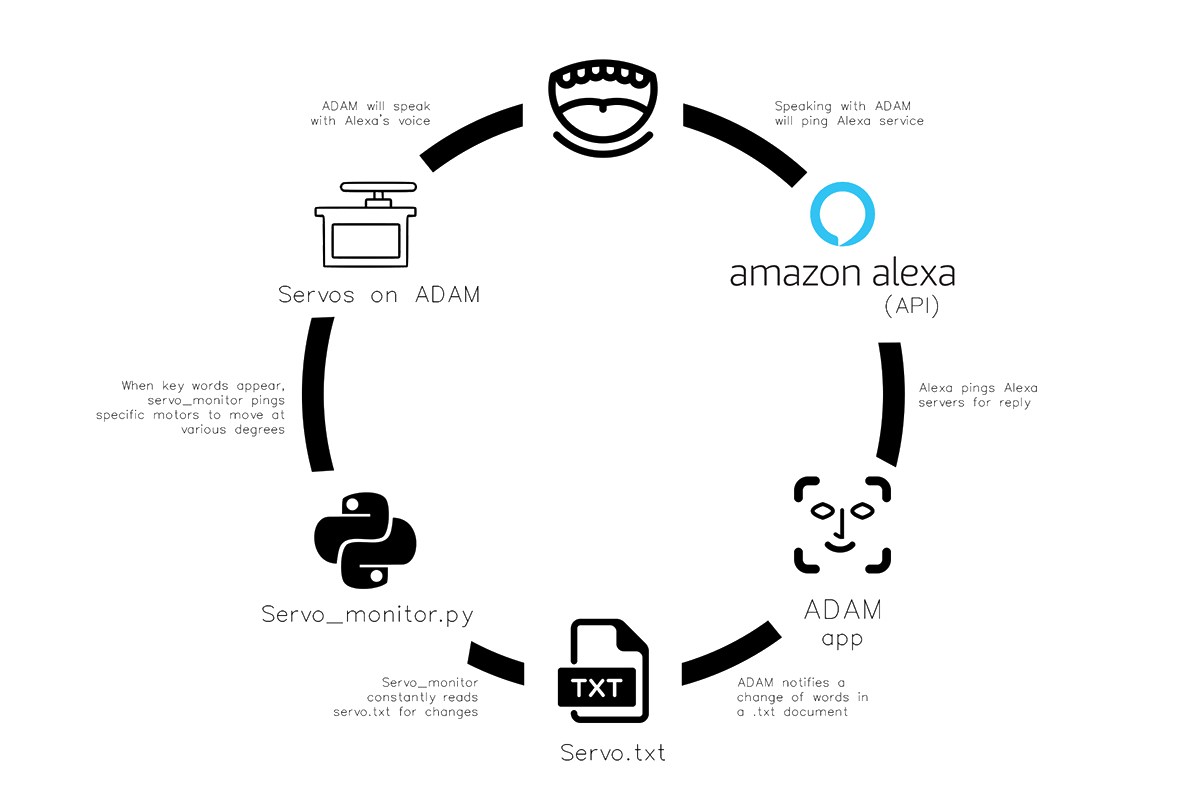

The Raspberry Pi 3B+ will be integrated with the Amazon Alexa application. This involves two steps:

- Configuration of Alexa on the Raspberry Pi

- Control of a DC stepper motor using the Raspberry Pi
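As a starting point for the motor control step, here is a minimal sketch of the arithmetic behind driving a motor from the Pi. Since the equipment list names servos, it assumes a hobby servo driven by a 50 Hz PWM signal with a 1 ms to 2 ms pulse range. Those values are typical but vary between servo models, and a stepper motor would need a different driver and different code altogether. The constants and the function name are assumptions.

```python
PWM_FREQUENCY_HZ = 50  # assumed: typical hobby-servo frame rate (20 ms period)
MIN_PULSE_MS = 1.0     # assumed pulse width at 0 degrees
MAX_PULSE_MS = 2.0     # assumed pulse width at 180 degrees


def angle_to_duty_cycle(angle_deg):
    """Convert a servo angle (clamped to 0-180) to a PWM duty cycle in percent."""
    angle = max(0.0, min(180.0, float(angle_deg)))
    pulse_ms = MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle / 180.0
    period_ms = 1000.0 / PWM_FREQUENCY_HZ
    return 100.0 * pulse_ms / period_ms


for angle in (0, 90, 180):
    print(angle, angle_to_duty_cycle(angle))
```

On the Pi itself, the resulting percentage could be handed to a PWM output, for example the `ChangeDutyCycle` method of an `RPi.GPIO` PWM object, once the pulse range has been calibrated against the real servo.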

Motor Control:

- Wake (upon activation) - Head Tilt Up - Pull once

- Sleep (upon deactivation) - Head Tilt Down - Release once

- Talk (upon command) - Mouth opens and closes - Pull and release loop

- Blink (every 10 seconds) - Eye opens and closes - Pull and release once
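The motor control plan above could be sketched as a small lookup from events to string actions. This version is hardware-free: it only returns the sequence of pulls and releases, which a separate motor layer would then carry out. The names (`Event`, `steps_for`, `blink_times`) and the number of talk cycles are hypothetical; the plan only says that talking loops, not how many times.

```python
from enum import Enum


class Event(Enum):
    WAKE = "wake"    # upon activation
    SLEEP = "sleep"  # upon deactivation
    TALK = "talk"    # upon command
    BLINK = "blink"  # every 10 seconds


BLINK_INTERVAL_S = 10  # from the motor control plan


def steps_for(event, talk_cycles=3):
    """Return the ordered (string, action) steps for one event.

    talk_cycles is an assumed loop count for the mouth while talking.
    """
    if event is Event.WAKE:
        return [("head_tilt", "pull")]
    if event is Event.SLEEP:
        return [("head_tilt", "release")]
    if event is Event.TALK:
        return [("mouth", "pull"), ("mouth", "release")] * talk_cycles
    if event is Event.BLINK:
        return [("eyes", "pull"), ("eyes", "release")]
    raise ValueError(f"unknown event: {event!r}")


def blink_times(duration_s, interval_s=BLINK_INTERVAL_S):
    """Seconds after waking at which a blink is due within duration_s."""
    return list(range(interval_s, int(duration_s) + 1, interval_s))


print(steps_for(Event.BLINK))
print(blink_times(35))
```

Keeping the sequence separate from the motor code means the behaviour can be checked on a laptop before anything is wired to the sculpture.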

SPECIAL THANKS

(Without them, this project would've never been such a success)

Adam Bachman, Amanda Agricola, Tim Devoe, Paul Mirel, Helvetosaur, Katie Zawadowicz, Benjamin Soh, Yeo Ying Zhi
